jigdo API

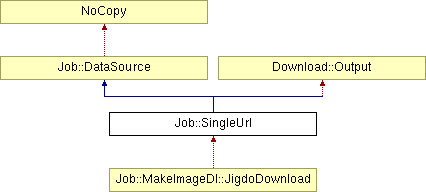

Job::SingleUrl Class Reference

Class which handles downloading an HTTP or FTP URL.

#include <single-url.hh>

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | SingleUrl (const string &uri) | Create object, but don't start the download yet - use run() to do that. |
| virtual | ~SingleUrl () | |
| void | setResumeOffset (uint64 resumeOffset) | Set offset to resume from - download must not yet have been started, call before run(). |
| void | setDestination (BfstreamCounted *destStream, uint64 destOffset, uint64 destEndOffset) | Set destination stream, can be null. Download must not yet have been started, call before run(). |
| virtual void | run () | Start download or resume it. |
| int | currentTry () const | Current try - possible values are 1..MAX_TRIES (inclusive). |
| virtual bool | paused () const | Is the download currently paused? From DataSource. |
| virtual void | pause () | Pause the download. |
| virtual void | cont () | Continue downloading. |
| void | stop () | Stop download. |
| bool | resuming () const | Are we in the process of resuming, i.e. are we currently downloading data before the resume offset and comparing it? |
| bool | failed () const | Did the download fail with an error? |
| bool | succeeded () const | Is download finished? (Also returns true if FTP/HTTP1.0 connection dropped). |
| virtual const Progress * | progress () const | Return the internal progress object. |
| virtual const string & | location () const | Return the URL used to download the data. |
| BfstreamCounted * | destStream () const | Return the registered destination stream, or null. |
| bool | resumePossible () const | Check whether resuming the download is possible after it has failed. |
| void | setNoResumePossible () | "Manually" cause resumePossible() to return false from now on. |

Static Public Attributes

| Type | Attribute | Description |
| --- | --- | --- |
| static const unsigned | RESUME_SIZE = 16*1024 | Number of bytes to download again when resuming a download. |
| static const int | MAX_TRIES = 20 | Maximum number of tries for the same URL, i.e. the number of resumes plus 1. |
| static const int | RESUME_DELAY = 3000 | Delay (millisec) before a download is resumed automatically, and during which a "resuming..." message or similar can be displayed. |

Detailed Description

Class which handles downloading an HTTP or FTP URL. It is a layer on top of Download and provides some additional functionality:

- Maintains a Progress object (for "time remaining", "kB/sec")

- Does clever resumes of downloads (with partial overlap to check that the correct file is being resumed)

- Does pause/continue by registering a callback function with glib. This is necessary because Download::pause() must not be called from within download_data()

- Contains a state machine which handles resuming the download a certain number of times if the connection is dropped.

This one will forever remain single since there are no single parties around here and it's rather shy. TODO: If you pity it too much, implement a MarriedUrl.

Constructor & Destructor Documentation

SingleUrl::SingleUrl (const string & uri)

SingleUrl::~SingleUrl () [virtual]

References debug.

Member Function Documentation

void SingleUrl::setResumeOffset (uint64 resumeOffset)

Set offset to resume from - download must not yet have been started, call before run().

By default, the offset is 0 after object creation and after a download has finished. If resumeOffset>0, SingleUrl will first read RESUME_SIZE bytes from destStream at offset destOffset+resumeOffset-RESUME_SIZE (or less if the first read byte would be <destOffset otherwise). These bytes are compared to bytes downloaded and *not* passed on to the IO object.

Parameters:

- resumeOffset: 0 to start download from start. Otherwise, destStream[destOffset;destOffset+resumeOffset) is expected to contain data from an earlier, partial download. The last up to RESUME_SIZE bytes of these will be read from the file and compared to newly downloaded data.

References RESUME_SIZE, Progress::setCurrentSize(), and Download::setResumeOffset().

Referenced by run().
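The overlap arithmetic described for setResumeOffset() can be sketched as a small standalone function. This is only an illustration under stated assumptions, not jigdo code: `kResumeSize`, `OverlapRange` and `overlapRange()` are hypothetical names, and `std::uint64_t` stands in for jigdo's `uint64`.

```cpp
#include <cassert>
#include <cstdint>

// Stand-in for SingleUrl::RESUME_SIZE (16*1024 in this reference).
constexpr std::uint64_t kResumeSize = 16 * 1024;

// Hypothetical helper type: the byte range [begin, end) of destStream that
// is re-read and compared against freshly downloaded data.
struct OverlapRange {
    std::uint64_t begin;
    std::uint64_t end;
};

// The range ends at destOffset+resumeOffset and is kResumeSize bytes long,
// or shorter if the first byte would otherwise lie before destOffset.
inline OverlapRange overlapRange(std::uint64_t destOffset,
                                 std::uint64_t resumeOffset) {
    const std::uint64_t end = destOffset + resumeOffset;
    const std::uint64_t length =
        resumeOffset < kResumeSize ? resumeOffset : kResumeSize;
    assert(length <= end);  // holds because length <= resumeOffset
    return OverlapRange{end - length, end};
}
```

With destOffset 0 and resumeOffset 100000 the range is [83616, 100000). With destOffset 4096 and resumeOffset 1000 it is clamped to [4096, 5096).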

void SingleUrl::setDestination (BfstreamCounted * destStream, uint64 destOffset, uint64 destEndOffset)

Set destination stream, can be null. As with setResumeOffset(), the download must not yet have been started; call before run().

If not called before run(), the defaults are (0,0,0).

Parameters:

- destStream: Stream to write downloaded data to, or null. Is *not* closed from the dtor!

- destOffset: Offset of URI's data within the file. 0 for single-file download, >0 if downloaded data is to be written somewhere inside a bigger file.

- destEndOffset: Offset of first byte in destStream (>destOffset) which the SingleUrl is not allowed to overwrite. 0 means don't care, no limit.

Referenced by run(), and GtkSingleUrl::stop().
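The destEndOffset rule can be illustrated with a bound check. The sketch below only computes how many bytes fit before the limit. What SingleUrl actually does when a download would exceed the limit is not stated here, and `writableBytes()` is a hypothetical helper, not part of jigdo.

```cpp
#include <cstdint>

// How many of `count` bytes may be written at absolute stream position `pos`
// without touching the byte at destEndOffset or anything after it.
// destEndOffset == 0 means "no limit", as in the parameter description.
inline std::uint64_t writableBytes(std::uint64_t pos, std::uint64_t count,
                                   std::uint64_t destEndOffset) {
    if (destEndOffset == 0) return count;       // no limit configured
    if (pos >= destEndOffset) return 0;         // already at or past the limit
    const std::uint64_t room = destEndOffset - pos;
    return count < room ? count : room;         // clamp to the remaining room
}
```

For example, with a limit of 1000, a 200-byte write at position 900 may only place 100 bytes.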

void SingleUrl::run () [virtual]

Start download or resume it.

All following bytes are written to destStream as well as passed to the IO object. We seek to the correct position each time when writing, so several parallel downloads for the same destStream are possible.

run() takes no arguments. The pragmaNoCache setting (if true, perform a "reload", discarding anything cached e.g. in a proxy) defaults to false, i.e. don't add "Pragma: no-cache" header.

Implements Job::DataSource.

Reimplemented in Job::MakeImageDl::JigdoDownload.

References Assert, debug, Progress::reset(), Download::run(), Progress::setDataSize(), setDestination(), and setResumeOffset().

Referenced by Job::MakeImageDl::JigdoDownload::run().
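The "seek before every write" idea, which allows several downloads to share one destination stream, can be sketched with a standard string stream. This is a minimal illustration only: `RegionWriter` and `interleavedDemo()` are hypothetical, and a `std::stringstream` stands in for the BfstreamCounted destination.

```cpp
#include <cstdint>
#include <sstream>
#include <string>

// Hypothetical writer: remembers its own position and seeks before every
// write, so it never relies on where another writer left the stream.
class RegionWriter {
public:
    RegionWriter(std::iostream& dest, std::uint64_t destOffset)
        : dest_(dest), pos_(destOffset) {}

    void write(const std::string& chunk) {
        dest_.seekp(static_cast<std::streamoff>(pos_), std::ios::beg);
        dest_.write(chunk.data(), static_cast<std::streamsize>(chunk.size()));
        pos_ += chunk.size();
    }

private:
    std::iostream& dest_;
    std::uint64_t pos_;
};

// Interleave two writers on one stream; each fills its own region.
inline std::string interleavedDemo() {
    std::stringstream dest(std::string(8, '.'));
    RegionWriter first(dest, 0);   // owns bytes 0..3
    RegionWriter second(dest, 4);  // owns bytes 4..7
    first.write("AA");
    second.write("BB");
    first.write("aa");
    second.write("bb");
    return dest.str();
}
```

Although the writes are interleaved, `interleavedDemo()` returns "AAaaBBbb", because each writer seeks to its own position first.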

int Job::SingleUrl::currentTry () const [inline]

Current try - possible values are 1..MAX_TRIES (inclusive).

bool SingleUrl::paused () const [virtual]

Is the download currently paused? From DataSource.

Implements Job::DataSource.

References Download::paused().

void SingleUrl::pause () [virtual]

Pause the download.

From DataSource.

Implements Job::DataSource.

References Paranoid, Download::pause(), paused(), and Progress::setAutoTick().

void SingleUrl::cont () [virtual]

Continue downloading.

From DataSource.

Implements Job::DataSource.

References Download::cont(), Paranoid, paused(), and Progress::reset().

Referenced by GtkSingleUrl::stop().

void Job::SingleUrl::stop () [inline]

bool Job::SingleUrl::resuming () const [inline]

Are we in the process of resuming, i.e. are we currently downloading data before the resume offset and comparing it?

bool Job::SingleUrl::failed () const [inline]

bool Job::SingleUrl::succeeded () const [inline]

Is download finished? (Also returns true if FTP/HTTP1.0 connection dropped).

References Download::succeeded().

const Progress * SingleUrl::progress () const [virtual]

Return the internal progress object.

From DataSource.

Implements Job::DataSource.

Referenced by GtkSingleUrl::stop().

const string & SingleUrl::location () const [virtual]

Return the URL used to download the data.

From DataSource.

Implements Job::DataSource.

References Download::uri().

BfstreamCounted * Job::SingleUrl::destStream () const [inline]

Return the registered destination stream, or null.

bool Job::SingleUrl::resumePossible () const [inline]

Call this after the download has failed (and your job_failed() has been called), to check whether resuming the download is possible. Returns true if resume is possible, i.e. the error was not permanent and the maximum number of tries has not been exceeded.

References Progress::currentSize(), Progress::dataSize(), Download::interrupted(), and MAX_TRIES.

void Job::SingleUrl::setNoResumePossible () [inline]

"Manually" cause resumePossible() to return false from now on.

Useful e.g. if the user of this class wanted to write to a file and there was an error doing so.

References MAX_TRIES.
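The interplay of currentTry(), resumePossible() and setNoResumePossible() can be modelled with a plain counter. The sketch below is an assumption-laden model, not the jigdo implementation: `TryCounter` and `nextTry()` are hypothetical, the "permanent error" flag is passed in by hand, and exhausting the counter in setNoResumePossible() is only inferred from that function's reference to MAX_TRIES.

```cpp
#include <cassert>

// Hypothetical model of the retry bookkeeping.
class TryCounter {
public:
    static constexpr int MAX_TRIES = 20;  // value taken from this reference

    int currentTry() const { return tries_; }

    // Assumed rule: resuming is possible while tries remain and the last
    // error was not permanent.
    bool resumePossible(bool permanentError) const {
        return !permanentError && tries_ < MAX_TRIES;
    }

    // Record the start of another try; only valid while tries remain.
    void nextTry() {
        assert(tries_ < MAX_TRIES);
        ++tries_;
    }

    // Assumed mechanism: exhaust the counter so that resumePossible()
    // returns false from now on.
    void setNoResumePossible() { tries_ = MAX_TRIES; }

private:
    int tries_ = 1;  // currentTry() ranges over 1..MAX_TRIES
};
```

A caller would check `resumePossible()` after each failure and call `nextTry()` only when it returns true, so the counter never exceeds MAX_TRIES.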

Member Data Documentation

const unsigned Job::SingleUrl::RESUME_SIZE = 16*1024 [static]

Number of bytes to download again when resuming a download.

These bytes will be compared with the old data.

Referenced by setResumeOffset().
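The comparison of re-downloaded bytes with the old data could look roughly like this. It is a sketch under assumptions: `overlapMatches()` is a hypothetical helper, and both byte ranges are passed as in-memory strings rather than read from a stream or a connection.

```cpp
#include <algorithm>
#include <string>

// Compare the overlap re-downloaded on resume with the bytes already stored.
// `stored` is the tail of the earlier partial download (up to RESUME_SIZE
// bytes); `downloaded` is newly received data starting at the same position.
// Returns true if the overlap matches, i.e. it looks like the same file.
inline bool overlapMatches(const std::string& stored,
                           const std::string& downloaded) {
    if (downloaded.size() < stored.size()) return false;  // not enough data yet
    return std::equal(stored.begin(), stored.end(), downloaded.begin());
}
```

Only once the whole overlap has matched would the following bytes be treated as new data and written to the destination.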

const int Job::SingleUrl::MAX_TRIES = 20 [static]

Maximum number of tries for the same URL, i.e. the number of resumes plus 1.

A resume is necessary if the connection drops unexpectedly.

Referenced by resumePossible(), and setNoResumePossible().

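const int Job::SingleUrl::RESUME_DELAY = 3000 [static]

Delay (millisec) before a download is resumed automatically, and during which a "resuming..." message or similar can be displayed.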

The documentation for this class was generated from the following files:

- job/single-url.hh

- job/single-url.cc

Generated on Tue Sep 23 14:27:43 2008 for jigdo by Doxygen 1.5.6